SWI: Speaking with Intent in Large Language Models

Abstract

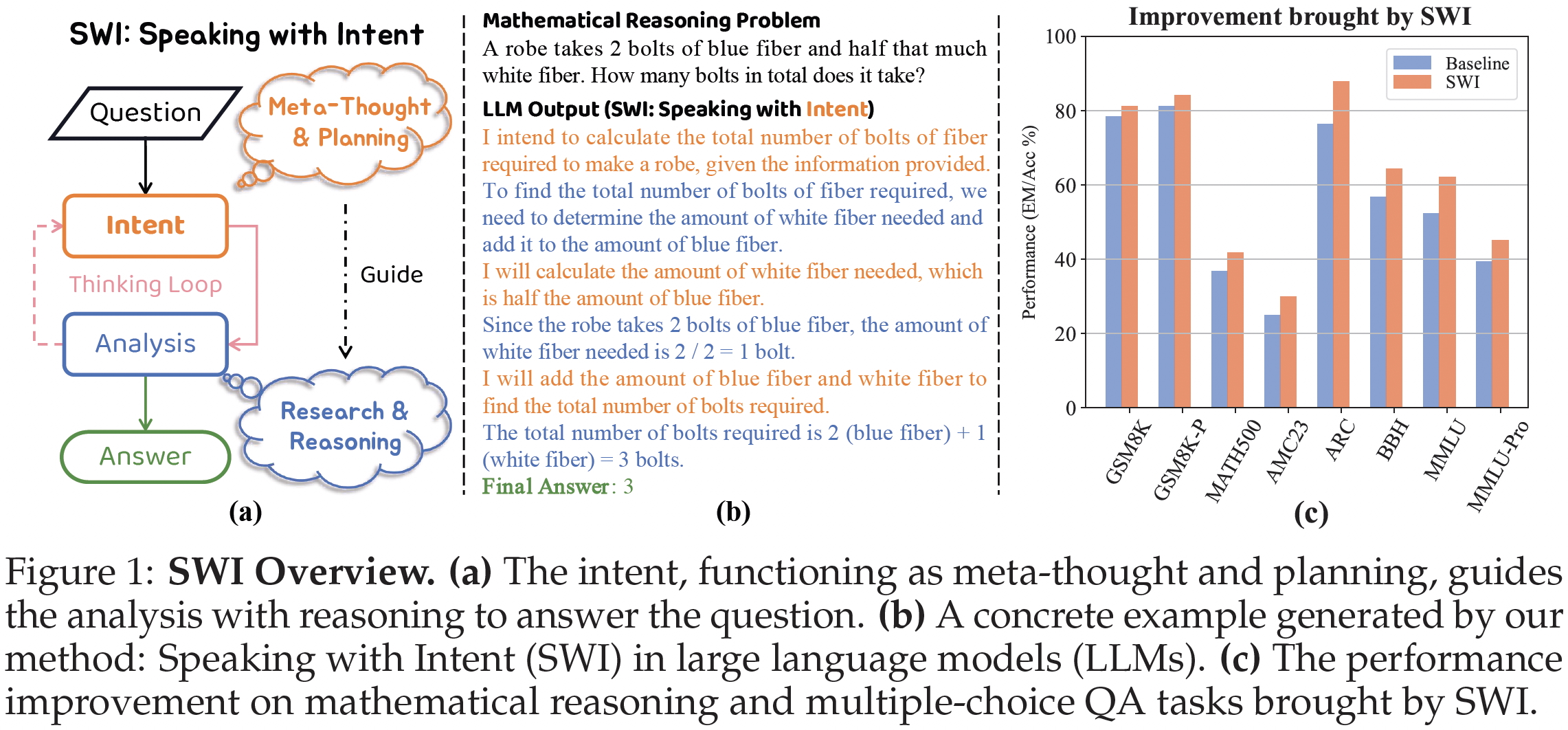

Intent, typically clearly formulated and planned, functions as a cognitive framework for communication and problem-solving. This paper introduces the concept of Speaking with Intent (SWI) in large language models (LLMs), where the explicitly generated intent encapsulates the model’s underlying intention and provides high-level planning to guide subsequent analysis and action. By emulating deliberate and purposeful thoughts in the human mind, SWI is hypothesized to enhance the reasoning capabilities and generation quality of LLMs. Extensive experiments on text summarization, multi-task question answering, and mathematical reasoning benchmarks consistently demonstrate the effectiveness and generalizability of Speaking with Intent over direct generation without explicit intent. Further analysis corroborates the generalizability of SWI under different experimental settings. Moreover, human evaluations verify the coherence, effectiveness, and interpretability of the intent produced by SWI. These promising results of enhancing LLMs with explicit intents open a new avenue for boosting LLMs’ generation and reasoning abilities with cognitive notions.
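
The abstract describes SWI only at a high level: the model first states an explicit intent, and that intent then guides the analysis and action that follow. The sketch below illustrates the idea under stated assumptions. The instruction text, the `Intent:` line marker, and the helpers `build_messages` and `split_intents` are all hypothetical and are not the prompts or parsing used in the paper. It contrasts an SWI-style request with direct generation (no intent instruction) and splits a model response into intent-guided steps.

```python
import re
from dataclasses import dataclass

# Hypothetical instruction; NOT the paper's actual prompt.
SWI_INSTRUCTION = (
    "Before each step of your answer, state your intent on its own line "
    "prefixed with 'Intent:', then carry out that step."
)

# Matches a line starting with the hypothetical 'Intent:' marker.
INTENT_RE = re.compile(r"^Intent:[ \t]*(.+)$", re.MULTILINE)


@dataclass
class IntentStep:
    intent: str   # the explicitly stated intent
    content: str  # the analysis or action guided by that intent


def build_messages(question: str, speak_with_intent: bool = True) -> list[dict]:
    """Build chat messages; the direct-generation baseline omits the intent instruction."""
    messages = []
    if speak_with_intent:
        messages.append({"role": "system", "content": SWI_INSTRUCTION})
    messages.append({"role": "user", "content": question})
    return messages


def split_intents(response: str) -> list[IntentStep]:
    """Split a response into (intent, content) steps at each 'Intent:' line."""
    matches = list(INTENT_RE.finditer(response))
    steps = []
    for i, m in enumerate(matches):
        # Content runs from the end of this intent line to the next intent line.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(response)
        steps.append(IntentStep(m.group(1).strip(), response[m.end():end].strip()))
    return steps


# Usage with a made-up response (not model output from the paper).
sample = (
    "Intent: Identify the quantities given in the problem.\n"
    "There are 3 boxes with 4 apples each.\n"
    "Intent: Compute the total.\n"
    "3 * 4 = 12, so the answer is 12.\n"
)
for step in split_intents(sample):
    print(f"[{step.intent}] {step.content}")
# [Identify the quantities given in the problem.] There are 3 boxes with 4 apples each.
# [Compute the total.] 3 * 4 = 12, so the answer is 12.
```

Keeping the intent as a separate, parseable field is one way to make the interpretability claim checkable: each stated intent can be inspected or rated on its own, apart from the content it guides.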

@inproceedings{yin2025swi,
  title = {SWI: Speaking with Intent in Large Language Models},
  author = {Yin, Yuwei and Hwang, EunJeong and Carenini, Giuseppe},
  booktitle = {Proceedings of the 18th International Natural Language Generation Conference},
  month = oct,
  year = {2025},
  address = {Hanoi, Vietnam},
  publisher = {Association for Computational Linguistics},
  url = {https://arxiv.org/abs/2503.21544},
}