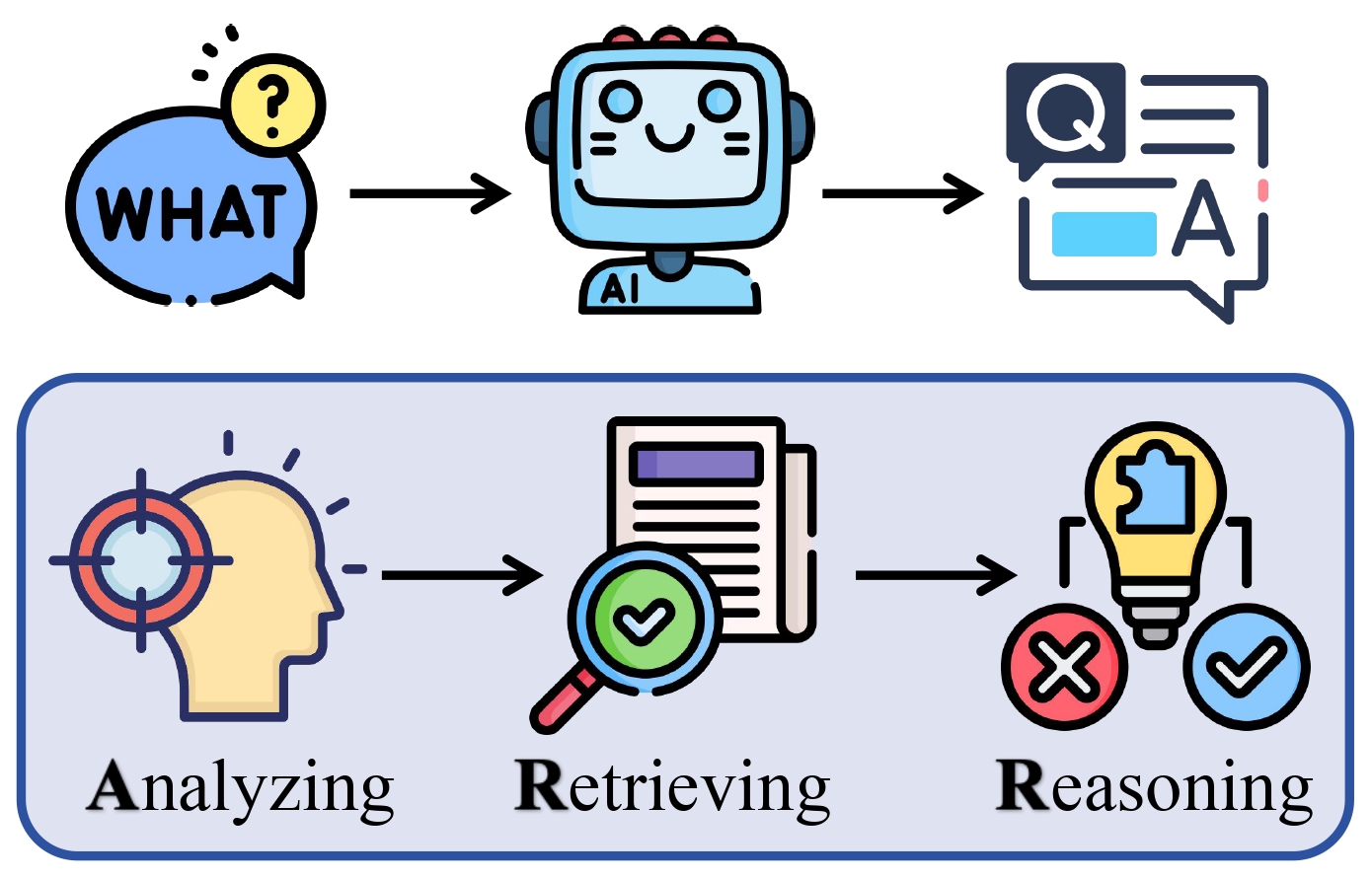

ARR: Question Answering with Large Language Models via Analyzing, Retrieving, and Reasoning

Abstract

Large language models (LLMs) have demonstrated impressive capabilities on complex evaluation benchmarks, many of which are formulated as question-answering (QA) tasks. Enhancing the performance of LLMs in QA contexts is becoming increasingly vital for advancing their development and applicability. This paper introduces ARR, an intuitive, effective, and general QA-solving method that explicitly incorporates three key steps: intent analysis, information retrieval, and logical reasoning. Comprehensive evaluations across diverse QA tasks show that ARR consistently outperforms the baseline methods, confirming its effectiveness. Ablation and case studies further validate the positive contribution of each ARR component, and experiments with varied prompt designs indicate that ARR remains effective regardless of the specific prompt formulation. Furthermore, extensive evaluations across various models, benchmarks, and generation configurations support the effectiveness, generalizability, and robustness of ARR.
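Because ARR is realized through prompt design, one way to picture it is a trigger sentence appended after the question. This trigger asks the model to analyze the question's intent, find relevant information, and then reason step by step. The sketch below assembles such prompts for multiple-choice QA. It also enumerates the subset-of-components variants that an ablation study would compare. Several parts are assumptions for illustration and not the paper's released code: the exact component phrasings, the `Question:`/`Answer:` template, and the helper names (`trigger_sentence`, `build_prompt`, `ablation_variants`). The zero-shot chain-of-thought trigger "Let's think step by step." is included only as a point of comparison.

```python
import string
from itertools import combinations

# Hypothetical phrasings of the three ARR components (assumption: the
# paper's exact wording may differ). Dict order fixes the A -> R -> R order.
COMPONENTS = {
    "analyze": "analyze the intent of the question",
    "retrieve": "find relevant information",
    "reason": "answer the question with step-by-step reasoning",
}

# Zero-shot chain-of-thought trigger, used here only for comparison.
COT_TRIGGER = "Answer: Let's think step by step."


def trigger_sentence(selected):
    """Join the selected components, in canonical order, into one trigger."""
    if not selected:
        return "Answer:"  # plain baseline: no reasoning trigger at all
    phrases = [COMPONENTS[k] for k in COMPONENTS if k in selected]
    if len(phrases) == 1:
        body = phrases[0]
    elif len(phrases) == 2:
        body = f"{phrases[0]} and {phrases[1]}"
    else:
        body = ", ".join(phrases[:-1]) + f", and {phrases[-1]}"
    return f"Answer: Let's {body}."


def build_prompt(question, options, trigger):
    """Format a multiple-choice question with lettered options and a trigger."""
    lines = [f"Question: {question}"]
    for label, option in zip(string.ascii_uppercase, options):
        lines.append(f"({label}) {option}")
    lines.append(trigger)
    return "\n".join(lines)


def ablation_variants():
    """Every non-empty subset of the components (7 variants for 3 components)."""
    keys = list(COMPONENTS)
    return [set(c) for r in range(1, len(keys) + 1) for c in combinations(keys, r)]


if __name__ == "__main__":
    question = "Which gas do plants absorb for photosynthesis?"
    options = ["Oxygen", "Carbon dioxide", "Nitrogen"]
    # Full ARR-style prompt versus the chain-of-thought comparison prompt.
    print(build_prompt(question, options, trigger_sentence(set(COMPONENTS))))
    print()
    print(build_prompt(question, options, COT_TRIGGER))
```

Keeping the components in a single ordered mapping means that the full trigger and each ablation variant come from the same phrases. Any difference between variants therefore reflects only which steps are requested.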

```bibtex
@article{yin2025arr,
  title   = {ARR: Question Answering with Large Language Models via Analyzing, Retrieving, and Reasoning},
  author  = {Yin, Yuwei and Carenini, Giuseppe},
  journal = {arXiv preprint arXiv:2502.04689},
  year    = {2025},
  url     = {https://arxiv.org/abs/2502.04689},
}
```