Improving Multilingual Neural Machine Translation with Auxiliary Source Languages

Abstract

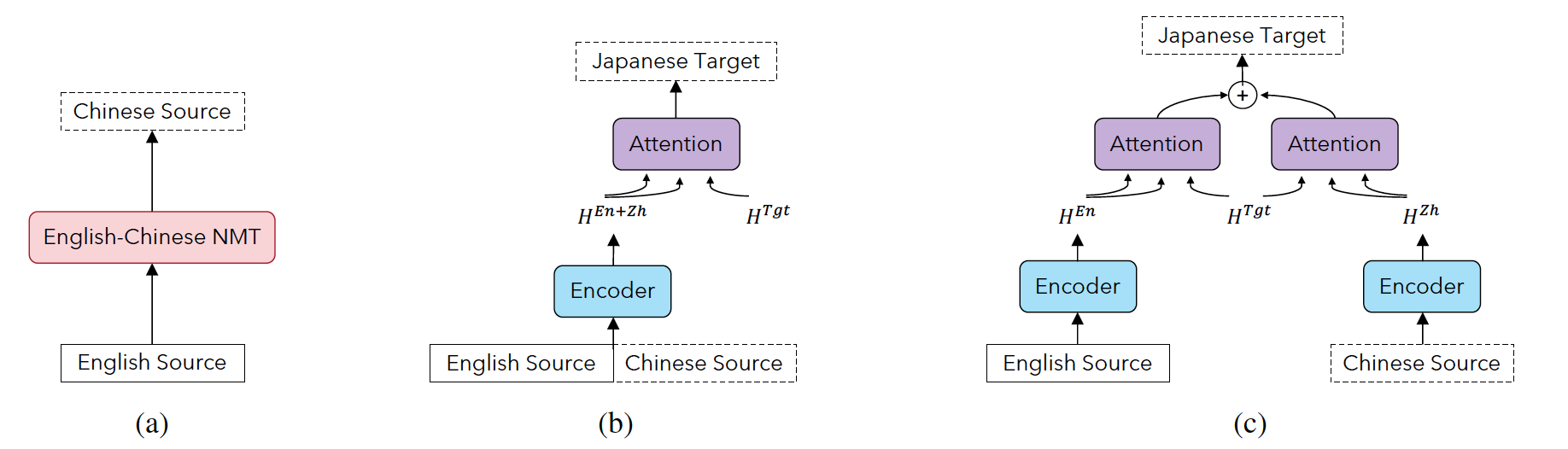

Multilingual neural machine translation models typically handle one source language at a time. However, prior work has shown that translating from multiple source languages improves translation quality. Different from existing approaches on multi-source translation that are limited to the test scenario where parallel source sentences from multiple languages are available at inference time, we propose to improve multilingual translation in a more common scenario by exploiting synthetic source sentences from auxiliary languages. We train our model on synthetic multi-source corpora and apply random masking to enable flexible inference with single-source or bi-source inputs. Extensive experiments on Chinese/English-Japanese and a large-scale multilingual translation benchmark show that our model outperforms the multilingual baseline significantly by up to +4.0 BLEU with the largest improvements on low-resource or distant language pairs.

@inproceedings{auxiliary,

title = "Improving Multilingual Neural Machine Translation with Auxiliary Source Languages",

author = "Xu, Weijia and Yin, Yuwei and Ma, Shuming and Zhang, Dongdong and Huang, Haoyang",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2021",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.findings-emnlp.260",

doi = "10.18653/v1/2021.findings-emnlp.260",

pages = "3029--3041",

}